In the summer of 2010 my HCI instructor approached me. I had already told him about my interest in the area and that I felt ready to start working on some projects. His expertise lies in data and scientific visualization, and he had something cooking at the moment: a fellow student was working on an independent-agents program that visualizes several objects in 3D space with a very dynamic behavior inspired by nature. The objects tend to group and navigate this space just like flocks of birds, swarms of insects and schools of fish do, and they can react to their environment in different ways.

This “Boids” algorithm is very popular and is used in graphical applications such as video games and movies because it approximates the real behavior of these animals without simulating what nature actually does, using a very simple algorithm that is not computationally heavy. Whether for real-time or pre-rendered presentations, the technique is really outstanding.
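For readers who have not seen it before, here is a minimal sketch of the three classic Boids rules (cohesion, alignment and separation). It is not the code from our project: the names `Vec3`, `Boid`, `FlockParams` and `stepFlock`, as well as every radius and weight, are assumptions made up for illustration.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float length(Vec3 v) { return std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z); }

struct Boid { Vec3 position; Vec3 velocity; };

// Hypothetical tuning values; a real application would expose and tweak these.
struct FlockParams {
    float neighborRadius   = 5.0f;   // how far a boid "sees"
    float separationRadius = 1.0f;   // closer than this counts as crowding
    float cohesionWeight   = 0.01f;
    float alignmentWeight  = 0.05f;
    float separationWeight = 0.1f;
};

// Advances the whole flock by one time step of length dt.
// The neighbor search is brute force (every pair is checked) to keep the sketch short.
void stepFlock(std::vector<Boid>& boids, const FlockParams& p, float dt) {
    assert(dt > 0.0f);
    std::vector<Vec3> newVelocities(boids.size());

    for (std::size_t i = 0; i < boids.size(); ++i) {
        Vec3 centerSum{0.0f, 0.0f, 0.0f};
        Vec3 velocitySum{0.0f, 0.0f, 0.0f};
        Vec3 pushAway{0.0f, 0.0f, 0.0f};
        int neighbors = 0;

        for (std::size_t j = 0; j < boids.size(); ++j) {
            if (i == j) continue;
            Vec3 offset = boids[j].position - boids[i].position;
            float d = length(offset);
            if (d > p.neighborRadius) continue;

            centerSum = centerSum + boids[j].position;
            velocitySum = velocitySum + boids[j].velocity;
            if (d < p.separationRadius) pushAway = pushAway - offset;
            ++neighbors;
        }

        Vec3 v = boids[i].velocity;
        if (neighbors > 0) {
            float inv = 1.0f / neighbors;
            // Cohesion: steer toward the average position of the neighbors.
            v = v + (centerSum * inv - boids[i].position) * p.cohesionWeight;
            // Alignment: steer toward the average velocity of the neighbors.
            v = v + (velocitySum * inv - boids[i].velocity) * p.alignmentWeight;
            // Separation: steer away from neighbors that are too close.
            v = v + pushAway * p.separationWeight;
        }
        newVelocities[i] = v;
    }

    // Apply all updates at once so every boid reacts to the same snapshot.
    for (std::size_t i = 0; i < boids.size(); ++i) {
        boids[i].velocity = newVelocities[i];
        boids[i].position = boids[i].position + boids[i].velocity * dt;
    }
}
```

Calling `stepFlock` once per frame and drawing each `position` is enough to see groups form. With thousands of elements the brute-force pair check becomes the bottleneck, which is where a spatial grid or similar structure would normally come in.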

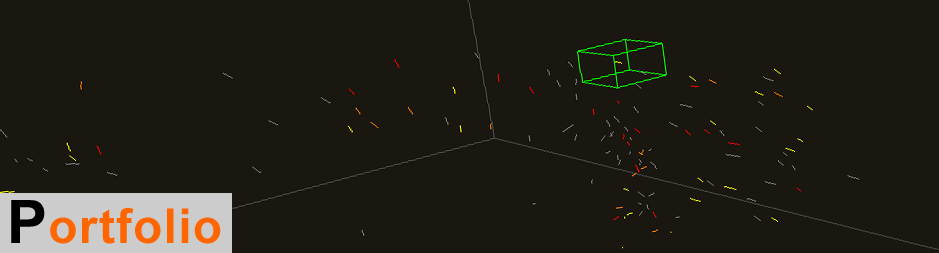

Well, I jumped into the project, optimized the code and developed some interactions that were needed to use the application in experimental setups. Later we added 3D stereo using active shutter glasses and, let me tell you, watching thousands of elements floating out of the screen can be pretty amazing.

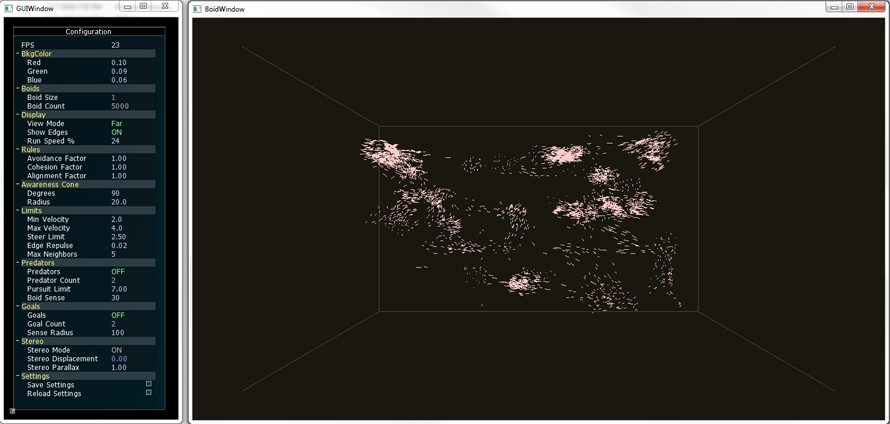

The previous picture shows about five thousand elements forming groups in 3D space. You can add other objects that either attract (food) or repel (predators) the boids, and you will see them react just as animals with similar behavior would. Everything was done using C++, WinAPI and OpenGL. The project was finished and ready to go.
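One simple way to model food and predators is as points that add an extra steering force to every nearby boid. The sketch below is only an illustration of that idea, not our actual implementation: `Influence`, `influenceSteering`, the sign convention for `strength` and the linear falloff are all assumptions.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Vec3 { float x, y, z; };

// Hypothetical environment object.
// strength > 0 attracts (food), strength < 0 repels (predator).
struct Influence {
    Vec3 position;
    float strength;
    float radius;   // boids farther away than this ignore the object
};

// Returns the extra steering vector for a boid at boidPos.
Vec3 influenceSteering(const Vec3& boidPos, const std::vector<Influence>& objects) {
    Vec3 steer{0.0f, 0.0f, 0.0f};
    for (const Influence& o : objects) {
        assert(o.radius > 0.0f);
        float dx = o.position.x - boidPos.x;
        float dy = o.position.y - boidPos.y;
        float dz = o.position.z - boidPos.z;
        float d = std::sqrt(dx * dx + dy * dy + dz * dz);
        if (d >= o.radius || d == 0.0f) continue;

        // Linear falloff: strongest next to the object, zero at the edge of its radius.
        // Dividing by d normalizes the direction toward the object.
        float scale = o.strength * (1.0f - d / o.radius) / d;
        steer.x += dx * scale;
        steer.y += dy * scale;
        steer.z += dz * scale;
    }
    return steer;
}
```

The returned vector would be added to a boid's velocity alongside the regular flocking rules, so a predator simply pushes the flock apart while food pulls it together.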

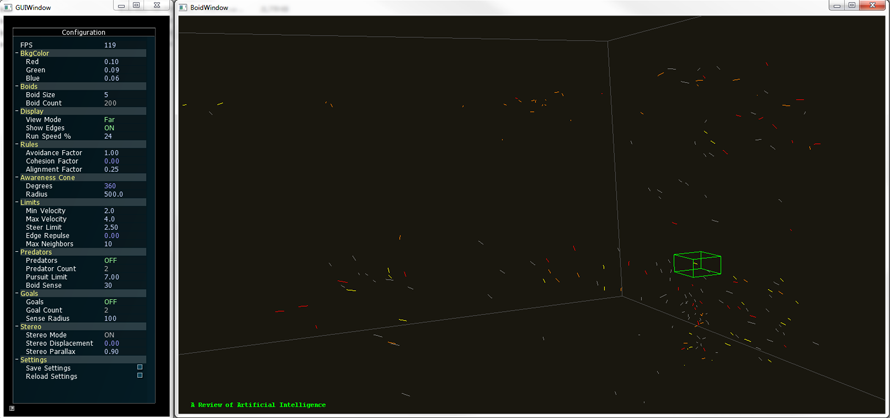

Fast-forward to 2012: theoretical research based on this technique was under way. What if each element metaphorically represented a document? Well, yes, it would be a mess, but what if we could actually sort them by some measure of similarity? Now we are talking: we could visualize big collections of documents grouped together in clusters according to their similarity.

To create such a thing we needed a parser module that reads documents such as PDF files and creates a data structure providing the similarity between each pair of them. That was done entirely by a partner while I focused on the visualization itself.

As the paper describes, the idea was to add a fourth rule to the Boids algorithm that basically steers each element toward other similar elements. There are several ways to do this, and they are described in the publication. We applied that idea along with a modified algorithm of my own design that folds the similarity values into a cohesion-like behavior. The results were pretty impressive after some tweaking of the other rules.
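To give a rough feel for what a similarity rule can look like, here is one possible sketch: a cohesion step where each neighbor is weighted by its similarity to the current document. This is not the algorithm from the publication nor the exact one we used; the function `similaritySteering`, the dense `similarity` matrix with values in [0, 1], the `threshold` and the `weight` are all assumptions for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };

// Steers element i toward the similarity-weighted centroid of the other elements.
// similarity[i][j] is assumed to be in [0, 1]; pairs below threshold are ignored.
Vec3 similaritySteering(std::size_t i,
                        const std::vector<Vec3>& positions,
                        const std::vector<std::vector<float>>& similarity,
                        float threshold,
                        float weight) {
    assert(i < positions.size());
    assert(similarity.size() == positions.size());
    assert(similarity[i].size() == positions.size());

    Vec3 weightedSum{0.0f, 0.0f, 0.0f};
    float total = 0.0f;

    for (std::size_t j = 0; j < positions.size(); ++j) {
        if (j == i) continue;
        float s = similarity[i][j];
        if (s < threshold) continue;
        // More similar documents pull harder.
        weightedSum.x += positions[j].x * s;
        weightedSum.y += positions[j].y * s;
        weightedSum.z += positions[j].z * s;
        total += s;
    }

    // No sufficiently similar document: this rule contributes nothing.
    if (total == 0.0f) return {0.0f, 0.0f, 0.0f};

    return {(weightedSum.x / total - positions[i].x) * weight,
            (weightedSum.y / total - positions[i].y) * weight,
            (weightedSum.z / total - positions[i].z) * weight};
}
```

Unlike regular cohesion, this version ignores distance, so similar documents find each other even when they start far apart. The result is added to the velocity together with the other rules, which is also why those rules need retuning once it is in place.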

To give the user more insight into the visualization we developed a color coding where the user simply selects a keyword and the documents that contain it are highlighted in a user-selected color. Going beyond that, the user can select an element to show the title of that particular document and even open it from the interface. We also added freeform movement and other interactions that let the user move around the 3D space.

In other words, we developed a whole different way to browse documents, and the application served as a proof of concept for a different take on visualizing them. And we didn’t stop there. We went a step further and incorporated Kinect support. Why? Because it can augment the perception and overall experience of the visualization, and because it’s fun.

So we proceeded to add head tracking coupled with 3D stereo and projected it using two high-definition stereoscopic projectors. The results were amazing. Here’s a quick video that I made on my tablet; it shows the use of both the regular desktop interface and the Kinect one:

Imagine: head tracking changes the perspective according to your body movements and the 3D glasses produce a good depth effect, so it is exactly as if you were watching all these elements interact through a real window.

I would like to thank the amazing Visualization team that I was part of during the summer of 2012.